How I Actually Use AI (and What That Says About Me)

From creative sparks to public policy fact-checks, here's what modern AI is - and isn't - good for in real life.

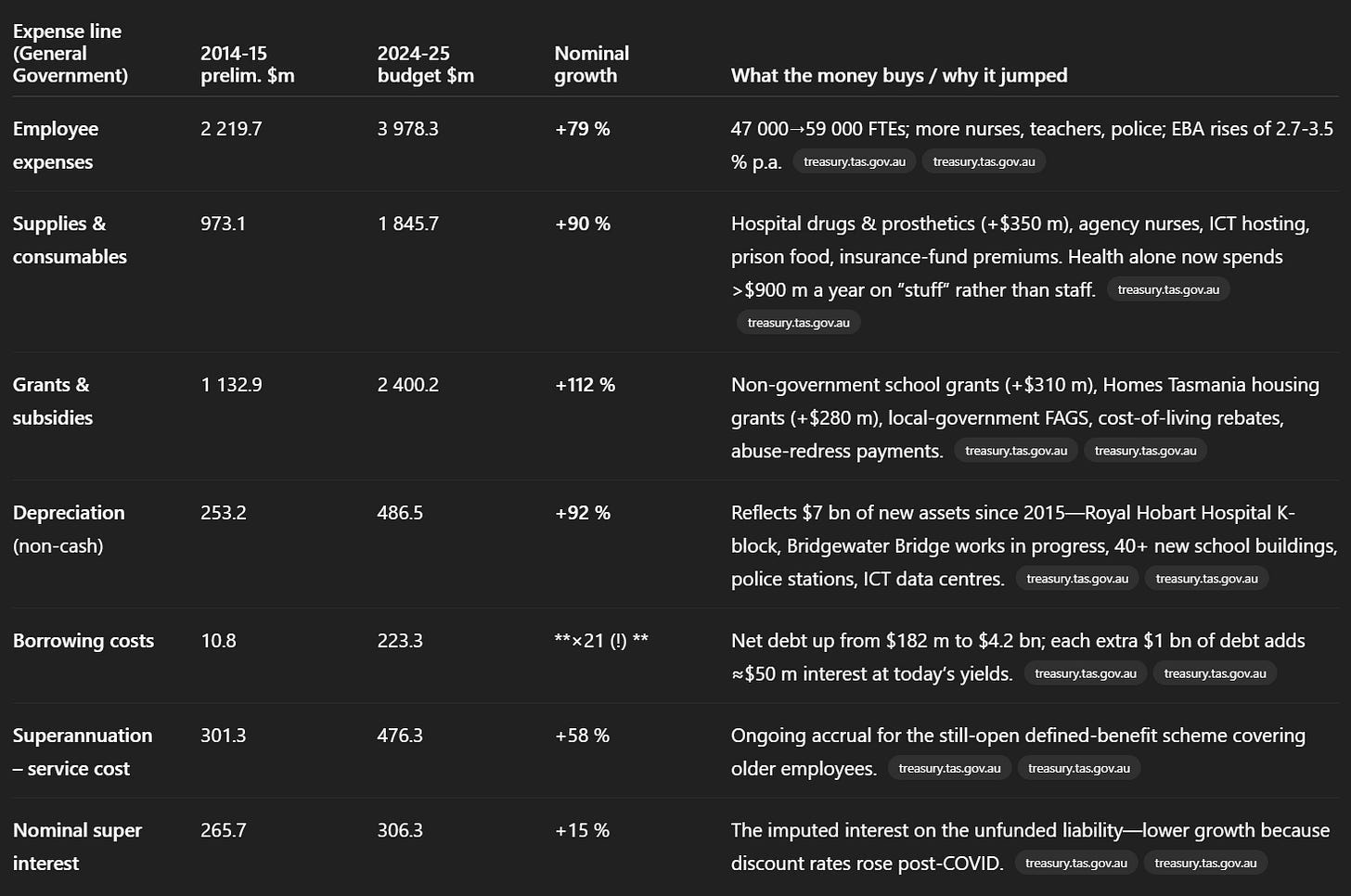

Last week I wrote up some thoughts on the Pre-Election Financial Report (PEFO). In it, I included a table showing the increase in Government expenditure from 2014-2015 to 2024-25. The table doesn’t exist anywhere on the internet and was generated by OpenAI’s ChatGPT o3, which read the relevant reports and compiled the data. I mentioned in the post that it was made by o3.

I received a couple of private messages from friends about this. I’ve been planning for a while to write a post about how I use AI in general, so now seems like as good a time as any. My aim here is not only to justify my use, but also to guide yours: to tell you when you can trust AI output, when you should and shouldn’t be using it, and to share some prompting and model choice tips.

I’m not sure how you (yes, you specifically) think about LLMs. Maybe you’ve seen memes of them catastrophically failing at seemingly simple tasks. Maybe you use them every day in your work. Maybe you once read something you liked that you didn’t know was LLM generated, then found out and felt betrayed. That makes it difficult for me to work out where to pitch this.

The bottom line is this: they can be worse than useless, but they can be incredible. Use them properly and you’ll be able to do things you couldn’t really imagine doing without them. Trust them at your peril.

A reckless driver crashing doesn’t mean cars are bad - just badly used. Same goes for AI.

Overall key points:

I treat LLMs as superhumanly fast researchers that I can’t fully trust.

There is a learnt skill in using them (choice of model, prompt, level of trust, when to double-check) and the output varies greatly depending on your level of skill.

Basic usage information

I pay for ChatGPT (4o, o3, 4.5), Claude (Sonnet, Opus) and Gemini (2.5 Pro), which ends up being around $100/month (AUD) in total. It gives access to the latest and most powerful models at usage limits that I (nearly) never reach.

This possibly seems crazy to you, but it definitely saves me 3 or 4 hours at a minimum (and I value my time at more than $40/hour).

More importantly, it enables me to do things that I just wouldn’t have time or be able to do otherwise.
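The break-even arithmetic behind that cost claim can be sketched in a few lines. This is only an illustration using the figures quoted above ($100 AUD per month, 3 or 4 hours saved, time valued at $40 per hour); the assumption that the hours saved are per month is mine, since the period isn't stated, and the function names are made up for the example.

```python
# Break-even check for the subscription cost quoted in the post.
# Figures from the post: ~$100 AUD/month, 3-4 hours saved, time valued at $40/hour.
# ASSUMPTION: the hours saved are per month (the post does not state the period).

MONTHLY_COST_AUD = 100
HOURLY_VALUE_AUD = 40


def break_even_hours(monthly_cost: float, hourly_value: float) -> float:
    """Hours that must be saved each month for the subscriptions to pay for themselves."""
    return monthly_cost / hourly_value


def net_benefit(hours_saved: float, monthly_cost: float, hourly_value: float) -> float:
    """Value of the time saved minus the subscription cost, in AUD per month."""
    return hours_saved * hourly_value - monthly_cost


if __name__ == "__main__":
    print("Break-even hours:", break_even_hours(MONTHLY_COST_AUD, HOURLY_VALUE_AUD))
    for hours in (3, 4):
        print(hours, "hours saved ->", net_benefit(hours, MONTHLY_COST_AUD, HOURLY_VALUE_AUD), "AUD")
```

Under those assumptions the subscriptions pay for themselves once they save more than two and a half hours a month, so even the low end of the estimate comes out ahead.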

For each model I have custom instructions about how I want it to do research and answer my questions. Everyone should do this - language models are still totally fine if you don’t, but you’re missing all the good stuff. These are preferences it has in its mind when answering all your queries.

For example, here is part of my “personal preferences” in Claude:

I also have prompt shortcuts that I use for various tasks. I have partially set these up, but I’ve worked out that some of them don’t work (the finance ones) and others I just don’t tend to use (email writing, purely because I don’t write that many emails and when I do, I’m happy to do them myself). Basically I type in the shortcut (using a text expander) and a bunch of text appears which I want to be included in the prompt. So I might go to 4o, Opus and 2.5 Pro, attach a Google Doc with a blog post in it, type the blog post feedback prompt shortcut, then hit enter.
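If you haven't used a text expander before, the idea is roughly a lookup table from a short trigger to a long block of prompt text. Here is a minimal sketch of that mechanism; the `;fb` and `;fc` triggers, their prompt text and the `expand` function are all hypothetical and invented for illustration, not my actual shortcuts or how any particular text expander app works internally.

```python
# Hypothetical shortcut table: the ";fb" and ";fc" triggers and their prompt text
# are invented for illustration, not the author's actual shortcuts.
SHORTCUTS = {
    ";fb": (
        "Give feedback on the attached blog post. Point out unclear passages, "
        "unstated assumptions and anything that could be cut."
    ),
    ";fc": (
        "Fact-check the text below. Give a reference for each claim you "
        "confirm or dispute."
    ),
}


def expand(text: str, shortcuts: dict[str, str]) -> str:
    """Replace every whitespace-separated token that exactly matches a trigger.

    Runs of whitespace in the input are collapsed to single spaces.
    """
    return " ".join(shortcuts.get(token, token) for token in text.split())


if __name__ == "__main__":
    print(expand(";fb", SHORTCUTS))
```

A real text expander does the replacement as you type, in whatever app you are in, but the effect is the same: a few keystrokes become the full prompt.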

For some questions I go straight to o3, because it’s the model that can dig into enough detail to come back with something great.

Otherwise, I rarely ask only one model - I commonly open three tabs, type the question into 4o, then copy it into Claude Sonnet/Opus and Gemini 2.5 Pro. I start with 4o because it’s the fastest, so I can usually check what it says after I’ve copied into the others, and by the time I’ve read 4o’s response, the others are ready to cross-reference it.

I have a more detailed “when to use what” guide at the bottom of this post.

Here are my most recent chats in ChatGPT. You can see a speech bubble vexed me.

The inciting incident

I’ll start with the example in question from the PEFO report.

The above is direct output from OpenAI’s ChatGPT o3.

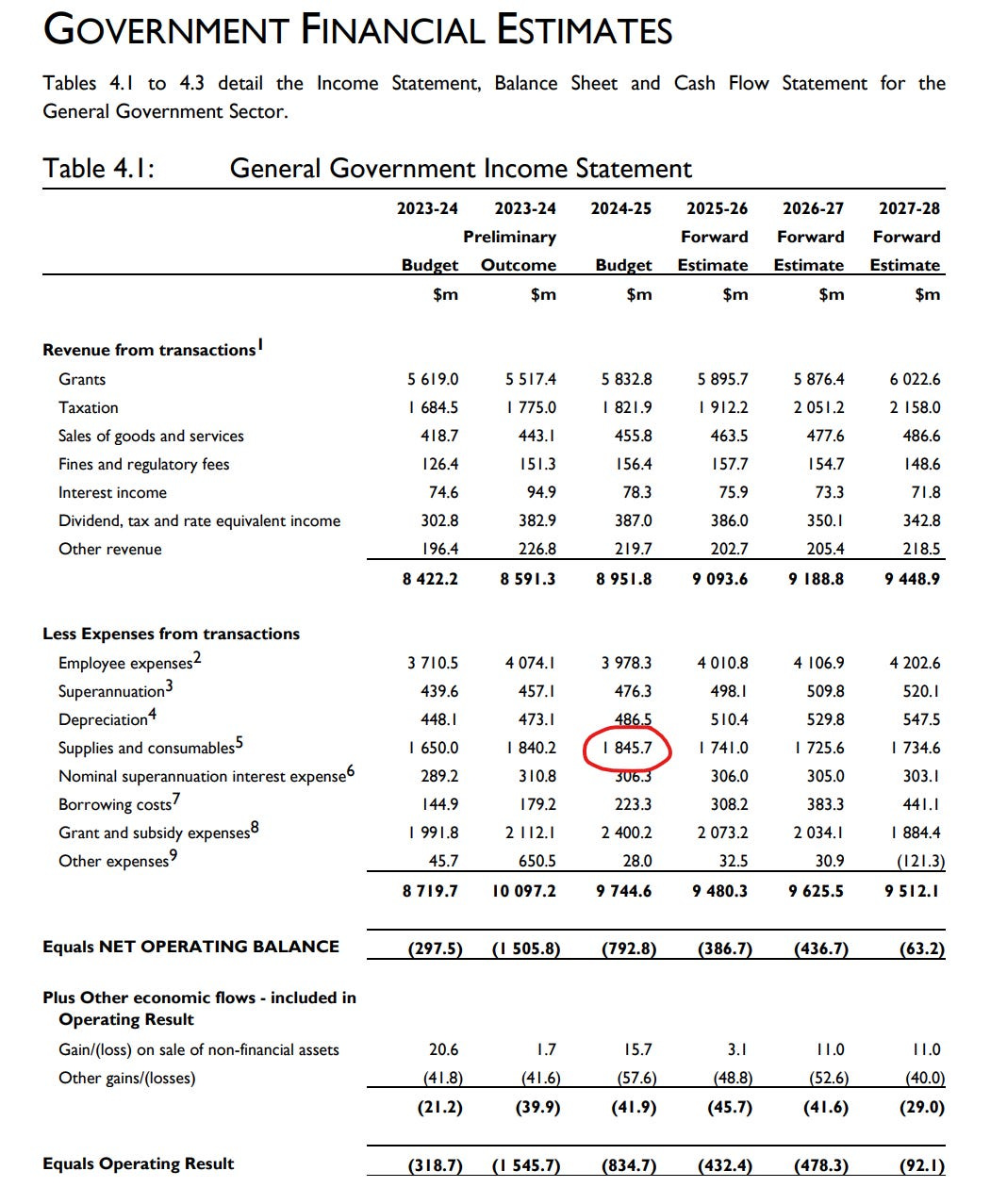

For each line in the table there are two links, one to the 2014-2015 Preliminary Outcomes Report and one to the 2024-2025 Tasmanian Budget Paper No 1. I decided to check “Supplies and Consumables” so I opened each of the two links in new windows and searched for “consumables”.

From the 2014-2015 report:

From the recent Budget:

It just pulled these numbers from the tables, which is more or less what I would have done if I were researching this. Except I don’t have time for that, so I possibly would have vaguely speculated about something else, rather than actually finding the data.

What my brain actually did in this situation was:

Ask o3 to work out what expenses have been growing

Notice that the output includes a statement and a table

Check that the table matches up with the statement

Notice that the table has two references per line, hover over the references to see what they link to, and notice they all point to the same two reports

Assume that o3 has read both reports (2014-2015 and 2024-25)

Check one set of numbers

Use the table

I was confident trusting the table partly because I checked some of it, but mainly because this is exactly the kind of task that is super easy for o3 to do. This is what it’s built for.

I wouldn’t ask Opus or Gemini to do the same thing. I would copy o3’s output into those models and ask them to fact check it, with references. If there are discrepancies, I can check them. There was a lot of this back and forth between o3, Opus and Gemini regarding the Health and State Services spend per resident details later in the post and in the end it turned out o3’s initial calculation was the best way to find the number I actually wanted (as opposed to some AIHW numbers that included extra things I didn’t really want).

The detail-oriented among you might point out that there may be differences in how things are accounted for in the budget, and that something in “consumables” in 2015 might be in “employee expenses” in 2025 or something. Yep, you’re probably right. But it’s not that important in this context, because the table I showed includes all line items. It doesn’t matter if something shows up in the wrong line: my overarching point was that overall expenses have gone up a lot, and something being in the wrong line wouldn’t negate that.

In another context, that level of detail would matter and in that case I would hope that I would dig into it.

How I learn about AI

I read Zvi Mowshowitz’s newsletter (Don’t Worry About the Vase), which is my main source of info. He knows all the big players, what they’re doing, how they’re doing it and, most importantly, how the smart people using the models are using them.

Zvi knows, for example, that o3 can be a lying liar in circumstances where you ask questions it can’t easily answer. He collects the examples of this kind of thing (there are so many things in this category), contextualises them, and puts them in a weekly summary.

Possibly most importantly, between using it and reading Zvi’s newsletter, I know which models to use for which tasks, and when to trust them.

Alex Lawsen’s Speculative Decoding is great for prompting tips too.

What to use AI for

Finding information and wrapping your head around things

I read a Substack post about “speedrunning” high school and wondered if it’s possible in the Tasmanian context to smash through a bunch of University courses for free in Years 11 and 12. ChatGPT o3 found all the info for me, but I didn’t automatically trust that it had understood correctly. I went directly to the sites and course guides and verified how it all works, what is required of schools if they want to offer Uni subjects in Years 11 and 12, and how many credits can carry forward into Uni. (o3)

I’d either have skipped this entirely or spent hours - AI found it in ten minutes.

Honestly, wrapping my head around the requirements for starting a school and getting general advice around it has been so much easier with o3 finding the information for me. I’m not sure I could have done it otherwise.

There’s a claim in a future article of mine which I finish with something like “if X happens, I’ll eat a horse”, so I was also asking o3 about the health risks of eating horse meat.

It’s great at explaining grammar rules, so it’s super easy to check your own grammar and learn why what you’ve done is right/wrong. (4o, Opus, 2.5 Pro)

I wanted to write a comment to the ESPNcricinfo commentator so I asked how to do that (you have to go on X, so I didn’t do it). (4o)

It’s great for technical troubleshooting, anything with internet and cables and compatibility. For example, when I copied this post over from docs two minutes ago, the screenshots didn’t copy properly, so I asked 4o about it and ended up downloading the doc as a web page which saved the images, allowing me to copy them. It fixed my problem and I now know how to do that. (4o)

“how can i see the flesch kincaid grade level in google docs?” (4o)

I was trying to work out if independent schools can make money. It felt like they should be able to, but they basically can’t. (o3)

“Florist career path TCE”: I had to check a bunch of info here, as the answer purported to be TASC specific but kind of wasn’t reading the course content pages properly. I probably only picked up on this because I’ve read similar things before. (o3)

Details

I thought I understood the Hare-Clark voting system. One of the consequences of the way I understood it was that unless you number every single box, at least a tiny bit of your vote is almost guaranteed to be exhausted. I wanted to make sure, so I asked o3 and the explanation it gave matched my understanding.

LLMs just make it so much easier to ask “wait, how does that actually work?” until you understand something. And because I love to know how things work at the molecular level, it works well for me. Continuing to ask questions also makes it more likely that, if it has made a mistake, you’ll find it. The whole point is that at bottom, you want to be learning something, so you need to understand how it works. Unless it’s a totally new topic for you, you can’t be bluffed.

Creative ideas

This was supposed to be The Thing that would separate AI from humans. “They’ll never match our creativity!” Well, turns out that’s one of the things they are best at!

It’s great at being creative about specific things. If you want to link two ideas together, it can do that really well. If you want to know more about what happens in an LLM’s neural network, listen to this interview with a Claude researcher. They really do think like us.

If I want to make an assessment at school, I can put the rubric and the unit plan into Opus, and ask it to come up with a number of creative assessment ideas. I pick one, ask for more detail, then use the output to make a decent assessment. For example, the assessment on the evolution unit my Grade 10s just did was about the evolution of a theoretical six-legged gliding lizard on a newly discovered planet. I would not have thought of that and it was great (not theoretical-teacher great, but actual-student great: they really showed me what they had learnt!)

We have to run “clubs” at school, so I fed 4o, Opus and 2.5 Pro a description of what I wanted to do and asked them for ideas for the name and a description of the club. I used all their brainstorming to come up with a new name and copied parts of two descriptions. This took two minutes and ended up with something good. If I hadn’t had AI, it would have either taken much longer to get something of similar quality, or I would have called it Business Club and described it as “make a business”.

I understand that there's something special about creative content being human generated. That said, if it turned out that Cixin Liu was totally AI generated and had a new novel out, I'd probably still buy it.

Finding references

If I think something is true, I can ask AI “what do you know about topic X? Give references for each viewpoint”, then I can check the references for what I think is true vs. false. This is way better than googling “is X true” because of course there will be evidence that X is true, and it’ll show you that because you asked for it, rather than giving references for both sides. I find that I even sometimes change my opinion based on the evidence, which is totally wild.

And simpler stuff (these are real and copied from my ChatGPT chats):

“What's that radiohead song with the water going up then down again?” (4o)

“What’s that joke about the chickpea and the lentil?” (4o)

“who are the bad gusy in sudan forcing kdis to become soldiers?” (4o)

“what's astroturfing in teh context of bots adn deepfakes and stuff” (4o)

“Apparently there's a book that's a great introduction to stoicism that's only like 20 pages long and it's kind of like a how-to guide. Can you find it and tell me what it is” (2.5 Pro)

“what is regarded as the best enchiridion translation?” (Opus)

Cross-checking

Pasting info from an article, an AI or a human, and saying “is this true” (assuming you have decent custom instructions) can get you closer to the truth. There’s no cost to doing this and it means you get a fuller picture rather than just the possibly biased source you started with. (usually 2.5 Pro)

Feedback

I put this post into 4o, Sonnet and 2.5 Pro and they fed me a bunch of feedback. They told me I was making assumptions (does $ mean AUD? specify currency), and identified clarity and conciseness issues. I ask for simulated comments from various sources (Facebook, specific subreddits, bloggers) which often point out things I have missed.

Shopping

I’m not a crazy person that gives it my credit card number, but I find it better than Google for finding, for example, 1 litre or larger Neem Oil that can be delivered to my home (Bunnings only stock 100 mL now and it’s so expensive). (o3)

When buying a mountain bike, it was good for initial research for context (which features you get at what price, what to look out for). Then we went to a shop and were able to ask sensible questions, compared the best option in the shop with other bikes with the same features, and worked out that yes, it is in fact a good deal to get an Avanti Hammer LT1 for $2k. Much of the benefit here was being able to explain our preferences initially, rather than reading forums written by people who have different preferences. This would be negated if every salesperson was perfect, but the guy we had was kinda only ok. With the right questions we were able to get all the information we needed. (o3)

I don’t use it to buy carrots and tofu.

If I wanted to buy a house I would absolutely use it to do the initial searches for me - it’s stupidly good at grinding out hours of that kind of work in seconds/minutes.

Making silly pictures

I put pictures in my blog sometimes and it’s easier to make the image I want with 4o than to try to find something online. For example, the image below would have taken ages to do a shoot for.

Random

“Give me a two sentence bio of myself” (4o, Opus, 2.5 Pro)

One time I was super tired and my kids weren’t playing well together and I couldn’t explain my way out of it, so I asked o3 for advice and followed it and it totally just worked. It’s not that it was amazing advice, just that my brain wasn’t working and it meant I was actually doing something, not just sitting there catatonic.

What it’s not good to use AI for

Writing for you

This is not necessarily because it is a terrible writer - it varies. For some writing tasks it’s either great or totally fine - say, when the quality doesn’t really matter or you want super weird metaphory stuff.

If you get AI to just do the thing for you:

it’ll often end up being sloppy - and maybe this is fine

you’ll always lose the benefit of writing the thing yourself - this is the main reason I write and by itself is a reason not to use AI for writing for me

you’ll sometimes be found out - and sometimes = always with particular readers

To some people, it is obvious when something has been written by AI. I’d say I’m personally reasonably good at recognising AI generated writing. Some people can pinpoint the model and even the version number that wrote it (e.g. Gemini 2.5 Pro 0506 rather than Gemini 2.5 Pro 0605). Any time you use AI generated content, ask yourself: do you want the people reading it to know that it was generated by AI? If yes, consider stating that it’s AI generated. If not, consider writing it yourself.

Thinking

Beware how much thinking you outsource. It can be great to do less thinking, but things atrophy if you don’t use them, including your brain.

For example, above I mentioned a school club. Right now, I can’t remember at all what I ended up calling it! I remember that I took a few ideas from the AIs and mashed them together, but I don’t remember the final output. If I’d spent 10 minutes coming up with something myself, I think I would remember it.

I deem this acceptable because in the moment I didn’t have the time or creative energy to come up with something good and I needed to just get it done. I accept the tradeoff that I’ve had a bit less practice coming up with creative names - though I got a bit more practice at judging names, because I read around 30 options, noticed the different vibes they gave and came up with a combination that worked. Ultimately, it’s not something I mind being bad at.

Which model?

The only models I actively use at the moment are ChatGPT (o3, 4o, 4.5), Claude (Sonnet, Opus) and Gemini (2.5 Pro). At the end of the day, reading this can get you started, but you only really get a vibe of which model to ask and how to ask each model when you just use them for months and git gud. I don’t consider myself a pro by the way: there are many levels above me in terms of proficiency.

o3: great for putting any level of detail in your question; it’ll come back with something great. Highlights for me were putting in a single prompt about food ideas and getting back a relevant menu that I used with no modifications or extra research, and asking it about a possible site for a school in Kingston (the response was incredibly detailed and well researched, and all the references checked out). It can take up to ten minutes to get back to you (though often less than 30s), which is why I don’t literally always use it.

4o: image generation, basic information finding, reviewing my writing, coming up with titles, subtitles, SEO.

Sonnet/Opus: fact-checking, SEO, helping me work out what I’m thinking of, explanations of complex topics. I use Opus if I'm happy to wait slightly longer for a slightly better answer, which is nearly always.

2.5 Pro: this is the model I “trust” the most. As in, I trust it the most to be conservative and not lie to me. Fact checking, SEO.

4.5: if I want it to write something for me. I’ve used it once in the last two months, didn't end up using the output, but it gave me ideas.

Voice Mode: I have only just started using this. It cray. If I use it on my phone I can turn the camera on and just ask about stuff around me. What kind of spider is this? What’s wrong with my diesel generator?

Feedback

How does this make you feel? Do you think less of me because I use it to research? Because some of my ideas are inspired by what a machine tells me based on my prompting it? I do actually want to know. What’s your experience of AI? My partner seems conflicted about it. Leave a comment, message me, leave anonymous feedback :)

I have also copied other people's prompts, like "analysis" (https://x.com/ben_r_hoffman/status/1916954985650360826), "absolute mode" (https://www.bikegremlin.net/threads/chatgpt-absolute-mode-go-cold.519/) and "ultra deep thinking mode" (https://shumerprompt.com/prompts/o3-maximum-reasoning-prompt-71b5828e-3c09-4df3-a9b7-25ef399e8977) (below).

"Ultra-deep thinking mode. Greater rigor, attention to detail, and multi-angle verification. Start by outlining the task and breaking down the problem into subtasks. For each subtask, explore multiple perspectives, even those that seem initially irrelevant or improbable. Purposefully attempt to disprove or challenge your own assumptions at every step. Triple-verify everything. Critically review each step, scrutinize your logic, assumptions, and conclusions, explicitly calling out uncertainties and alternative viewpoints. Independently verify your reasoning using alternative methodologies or tools, cross-checking every fact, inference, and conclusion against external data, calculation, or authoritative sources. Deliberately seek out and employ at least twice as many verification tools or methods as you typically would. Use mathematical validations, web searches, logic evaluation frameworks, and additional resources explicitly and liberally to cross-verify your claims. Even if you feel entirely confident in your solution, explicitly dedicate additional time and effort to systematically search for weaknesses, logical gaps, hidden assumptions, or oversights. Clearly document these potential pitfalls and how you’ve addressed them. Once you’re fully convinced your analysis is robust and complete, deliberately pause and force yourself to reconsider the entire reasoning chain one final time from scratch. Explicitly detail this last reflective step."

This is the way. Whenever I meet a friend I haven't seen in a while, one of the things I'm most curious about is a detailed look at their day-to-day sources of thought and information.